The Generative AI Revolution is Here.

Are you ready? We are.

For years, Artificial Intelligence (AI) and Machine Learning (ML) have reshaped industries, empowered lives, and tackled complex global issues. Traditionally grouped with high-performance computing (HPC), these transformative forces have fueled digital transformations across organizations of all sizes, boosting productivity, efficiency, and problem-solving prowess.

The emergence of highly innovative generative AI (GenAI) models, powered by deep learning and neural networks, is disrupting the landscape even further. The growing use of these data- and compute-intensive ML and GenAI applications places unprecedented demands on data center infrastructure, which now requires reliable high-bandwidth, low-latency data transmission, significantly higher cabling and rack power densities, and advanced cooling methods.

The Backbone of AI – Data Center Ops in the Age of Explosive Growth

Explore the evolution of data centers with Siemon’s latest podcast episode. From legacy systems adapting to modern demands to AI-driven growth reshaping infrastructure, we dive into past, present, and future trends—offering insights on building agile, sustainable, and future-ready data centers.

Advanced AI Calls for a Data Center Design Rethink

As data centers gear up for GenAI, users need innovative, robust network infrastructure solutions that help them easily design, deploy, and scale back-end, front-end, and storage network fabrics for complex AI-focused HPC environments.

NVIDIA Deep Learning Inference Platform Example

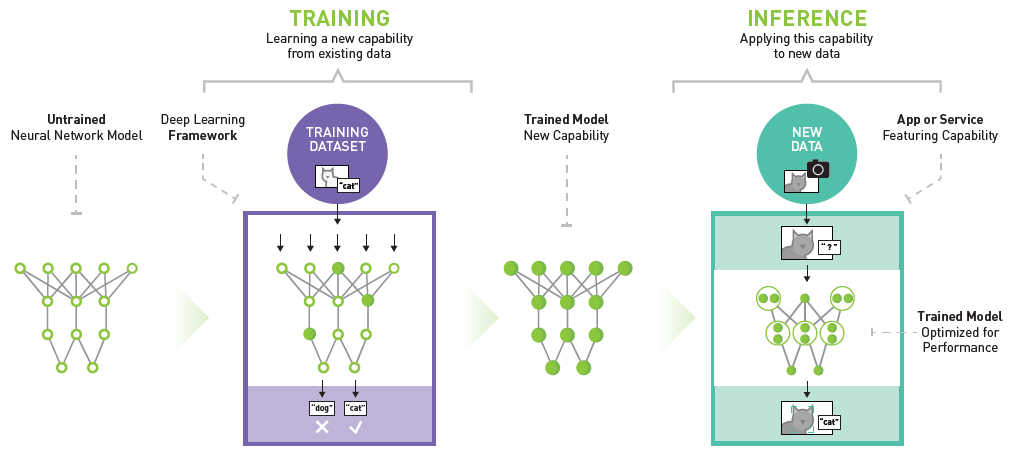

Accelerated GenAI and ML workloads consist of two phases: training (learning new capabilities) and inference (applying those capabilities to new data). The underlying deep neural networks are loosely modeled on the human brain's architecture and function; they learn to generate new, original content by analyzing patterns, nuances, and characteristics across massive, complex datasets. Large language models (LLMs), such as those behind ChatGPT and Google Bard, are examples of GenAI models trained on vast amounts of data to understand and generate plausible language responses. General-purpose CPUs, which perform control and input/output operations largely in sequence, cannot pull vast amounts of data in parallel from various sources and process it quickly enough.

Therefore, accelerated ML and GenAI models rely on graphics processing units (GPUs), which use massively parallel processing to execute thousands of high-throughput computations simultaneously. The compute capability of a single GPU-based server can match the performance of dozens of traditional CPU-based servers!
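To make the training and inference phases described above a little more concrete, here is a minimal Python sketch. It assumes a toy one-variable linear model rather than a real neural network: the hypothetical `train` function learns a weight and bias from example data by gradient descent, and the hypothetical `infer` function applies them to inputs the model has not seen. The function names, learning rate, and data are illustrative assumptions, not part of any Siemon or NVIDIA product.

```python
# Toy illustration of the two phases of an ML workload.
# Assumption: a one-variable linear model stands in for a real neural
# network; actual GenAI models have billions of parameters.

def train(xs, ys, lr=0.01, epochs=2000):
    """Training: learn weight w and bias b so that y ~ w*x + b (gradient descent)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        # Each per-sample term is independent of the others, which is the
        # kind of work that parallel hardware can compute simultaneously.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def infer(params, new_xs):
    """Inference: apply the learned parameters to new, unseen inputs."""
    w, b = params
    return [w * x + b for x in new_xs]


# Hypothetical training data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

params = train(xs, ys)                  # training phase
predictions = infer(params, [5.0, 10.0])  # inference phase
print([round(p, 2) for p in predictions])  # prints [11.0, 21.0]
```

Training loops over the whole dataset many times, while inference is a single cheap pass per new input. At GenAI scale, roughly the same pattern is repeated across billions of parameters and vast datasets, which is why that work is offloaded to GPUs.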

NVIDIA Solution Advisor

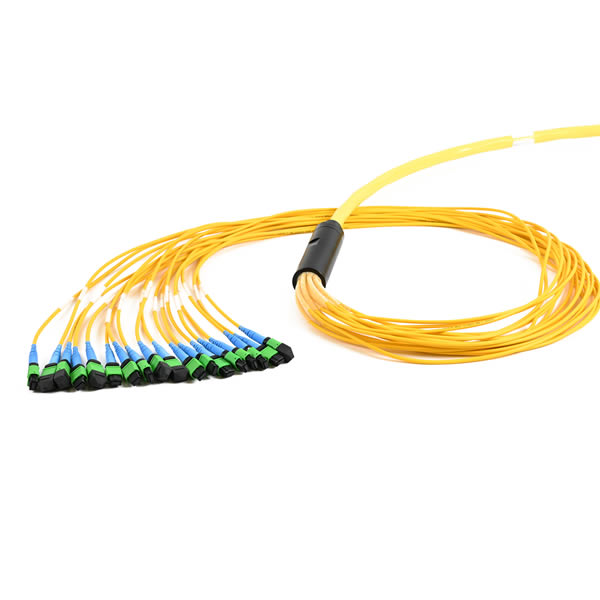

Siemon Announces Optical Patching Solutions for GenAI Networks Using NVIDIA Accelerated Computing.

On-Demand TechTalk: What is the Impact of Generative AI on Your Infrastructure?

Our GenAI experts provide much-needed clarity on this fast-changing subject, showcasing demonstrable examples of how to adapt your network architecture designs to best meet the requirements for training and inferencing.

Siemon is AI-Ready

Siemon is at the frontier of the GenAI revolution. Through collaboration with our customers and partners, who are at the forefront of delivering these technologies, we've developed a range of next-generation AI-Ready solutions to support your deployments.

Emerging AI Data Center Network Architectures and Applications

Explore Siemon’s cutting-edge AI-ready solutions designed for emerging data center architectures. Discover high-performance cabling and connectivity for seamless AI and HPC workloads.
